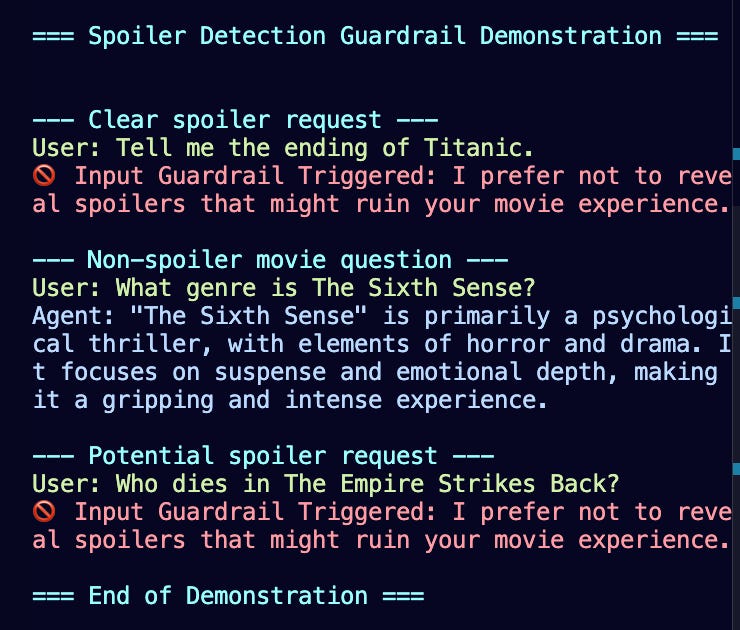

OpenAI Agents SDK: Input Guardrails for Spoiler Detection

Friends Don't Let AI Agents Spoil Movies

Input guardrails help keep an AI agent's interactions safe and appropriate by checking user input and halting the run when a check fails. This walkthrough uses an input guardrail to protect users from movie spoilers.

Why Use Input Guardrails?

Protect user experiences by preventing spoilers

Enhance efficiency by intercepting inappropriate requests early

Ensure relevant interactions by managing user inputs proactively

Guardrail in Action

Example: Spoiler Detection Guardrail

Below is a practical example using input guardrails to detect and prevent spoiler requests:

Set up your Python environment:

```bash
python -m venv env
source env/bin/activate
pip install openai-agents
pip install python-dotenv
pip install termcolor
pip install pydantic
```

This example demonstrates how to use the Agents SDK to implement input guardrails that prevent users from getting spoilers about movies. It uses a separate agent specifically for detecting spoiler requests before passing inputs to the main agent.
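The flow described above, a separate check that can stop a request before the main agent answers, can be sketched without the SDK. This is only an illustration under stated assumptions: `CheckResult`, `TripwireTriggered`, `check_input`, `main_agent`, and `run_with_guardrail` are hypothetical names, a keyword match stands in for the LLM-based detection agent, and running the check strictly before the agent is a simplification of however the SDK schedules its guardrails.

```python
import asyncio
from dataclasses import dataclass
from typing import Optional


@dataclass
class CheckResult:
    """Hypothetical stand-in for the SDK's guardrail output."""
    tripwire_triggered: bool
    safe_response: Optional[str] = None


class TripwireTriggered(Exception):
    """Hypothetical stand-in for the SDK's tripwire exception."""

    def __init__(self, result: CheckResult):
        super().__init__("input guardrail tripwire triggered")
        self.result = result


# A few of the phrases listed in the detection agent's instructions.
SPOILER_PHRASES = (
    "how does it end",
    "tell me the ending",
    "spoil it for me",
    "who dies in",
)


async def check_input(user_input: str) -> CheckResult:
    """Keyword check standing in for the LLM-based spoiler detection agent."""
    text = user_input.lower()
    if any(phrase in text for phrase in SPOILER_PHRASES):
        return CheckResult(True, "I prefer not to reveal spoilers.")
    return CheckResult(False)


async def main_agent(user_input: str) -> str:
    """Placeholder for the main agent; a real one would call a model."""
    return f"(answer to: {user_input})"


async def run_with_guardrail(user_input: str) -> str:
    """Run the check and only reach the main agent if it passes."""
    result = await check_input(user_input)
    if result.tripwire_triggered:
        raise TripwireTriggered(result)
    return await main_agent(user_input)


async def demo(user_input: str) -> str:
    """Turn a tripped guardrail into a friendly message for the caller."""
    try:
        return await run_with_guardrail(user_input)
    except TripwireTriggered as exc:
        return f"Blocked: {exc.result.safe_response}"
```

Calling `asyncio.run(demo("Tell me the ending of Titanic."))` takes the blocked path, while a question such as "What genre is The Sixth Sense?" passes through to the placeholder agent. The full example that follows has the same shape, with the SDK supplying the exception and the run loop.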

```python
import asyncio
from typing import Optional

from pydantic import BaseModel, Field
from dotenv import load_dotenv
from termcolor import colored

from agents import (
    Agent,
    GuardrailFunctionOutput,
    InputGuardrailTripwireTriggered,
    RunContextWrapper,
    Runner,
    input_guardrail,
    set_tracing_disabled,
)

# Load environment variables (for example the API key) from a .env file
load_dotenv()

# Disable tracing to skip trace output
set_tracing_disabled(True)

# Movie database with spoiler information (reduced to essential examples).
# Keys are lowercase titles; lookups below normalize to lowercase as well.
MOVIE_DATABASE = {
    "the sixth sense": {
        "spoilers": ["bruce willis is dead", "malcolm is a ghost"],
        "safe_description": "A psychological thriller about a child psychologist working with a young boy who claims to see ghosts.",
    },
    "the empire strikes back": {
        "spoilers": ["darth vader is luke's father", "vader is luke's father"],
        "safe_description": "The second installment in the original Star Wars trilogy where Luke Skywalker continues his Jedi training.",
    },
    "titanic": {
        "spoilers": ["jack dies", "the ship sinks"],
        "safe_description": "A romantic drama set against the backdrop of the ill-fated maiden voyage of the Titanic.",
    },
}


class SpoilerCheckOutput(BaseModel):
    """Structured verdict returned by the spoiler detection agent."""

    is_spoiler_request: bool = Field(description="Whether the user is asking for spoilers")
    detected_movie: Optional[str] = Field(default=None, description="The movie detected in the request")
    reasoning: str = Field(description="The reasoning for the detection")


# Create the spoiler detection agent
spoiler_detection_agent = Agent(
    name="Spoiler Detection Agent",
    instructions="""
    You are a specialized agent that detects spoiler requests for movies.
    Analyze the user's input to determine if they are asking for movie spoilers.
    Look for phrases like "what happens at the end", "how does it end", "tell me the ending",
    "spoil it for me", "what's the twist", "who dies in", etc.
    Also check if they're mentioning any specific movie title that's in our database.
    """,
    output_type=SpoilerCheckOutput,
)


@input_guardrail
async def detect_spoiler_request(
    context: RunContextWrapper, agent: Agent, user_input: str
) -> GuardrailFunctionOutput:
    """Uses a specialized agent to detect if a user is asking for movie spoilers."""
    result = await Runner.run(spoiler_detection_agent, user_input)
    check = result.final_output
    is_spoiler_request = check.is_spoiler_request

    # The model may return "Titanic" rather than "titanic", so normalize
    # the title before looking it up in the lowercase-keyed database.
    movie_key = check.detected_movie.strip().lower() if check.detected_movie else None

    safe_response = None
    if is_spoiler_request and movie_key in MOVIE_DATABASE:
        safe_response = (
            f"I'd rather not spoil '{movie_key.title()}' for you. "
            f"{MOVIE_DATABASE[movie_key]['safe_description']}"
        )
    elif is_spoiler_request:
        safe_response = "I prefer not to reveal spoilers that might ruin your movie experience."

    return GuardrailFunctionOutput(
        tripwire_triggered=is_spoiler_request,
        output_info={
            "result": check.model_dump(),
            "safe_response": safe_response,
        },
    )


# Create the main movie agent with the guardrail
movie_agent = Agent(
    name="Movie Chat Agent",
    instructions="You are a helpful movie expert. Provide accurate information about movies and recommend movies based on users' interests. Never reveal major spoilers about movie plots, twists or endings.",
    input_guardrails=[detect_spoiler_request],
)


# Run simplified examples to demonstrate the guardrail
async def main():
    """Run a demonstration of the spoiler detection guardrail."""
    print(colored("\n=== Spoiler Detection Guardrail Demonstration ===\n", "cyan"))

    examples = [
        ("Tell me the ending of Titanic.", "Clear spoiler request"),
        ("What genre is The Sixth Sense?", "Non-spoiler movie question"),
        ("Who dies in The Empire Strikes Back?", "Potential spoiler request"),
    ]

    for user_input, description in examples:
        print(colored(f"\n--- {description} ---", "cyan"))
        print(colored(f"User: {user_input}", "green"))

        try:
            result = await Runner.run(movie_agent, user_input)
            print(colored(f"Agent: {result.final_output}", "blue"))
        except InputGuardrailTripwireTriggered as e:
            # The guardrail's output_info carries the safe reply built above
            info = e.guardrail_result.output.output_info
            message = info.get("safe_response") or "Spoiler request detected!"
            print(colored(f"🚫 Input Guardrail Triggered: {message}", "red"))
        except Exception as e:
            print(colored(f"❌ Error: {e}", "red"))

    print(colored("\n=== End of Demonstration ===\n", "cyan"))


if __name__ == "__main__":
    asyncio.run(main())
```

Next Steps

As a next step, try implementing output guardrails, which check the agent's response after it has processed the user's input.
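One possible starting point for an output-side check is to scan the agent's response for the spoiler phrases already stored in `MOVIE_DATABASE`. The sketch below is a dependency-free assumption of how that core check might look: `find_spoilers` and `redact_if_spoiler` are hypothetical helper names, and plain substring matching is a simplification that will miss paraphrased spoilers. The SDK exposes an `output_guardrail` decorator alongside `input_guardrail`; consult its documentation for the exact function signature before wiring a check like this into an agent.

```python
from typing import Dict, List

# Trimmed copy of the article's MOVIE_DATABASE, keeping only the spoiler phrases.
SPOILERS: Dict[str, List[str]] = {
    "the sixth sense": ["bruce willis is dead", "malcolm is a ghost"],
    "the empire strikes back": ["darth vader is luke's father", "vader is luke's father"],
    "titanic": ["jack dies", "the ship sinks"],
}


def find_spoilers(response_text: str, spoilers: Dict[str, List[str]] = SPOILERS) -> List[str]:
    """Return every known spoiler phrase that appears in the response text."""
    text = response_text.lower()
    return [
        phrase
        for phrases in spoilers.values()
        for phrase in phrases
        if phrase in text
    ]


def redact_if_spoiler(response_text: str) -> str:
    """Hypothetical helper: replace a spoiler-bearing response with a neutral message."""
    if find_spoilers(response_text):
        return "That answer would have given away part of the plot, so it was withheld."
    return response_text
```

Inside a guardrail function, a non-empty result from `find_spoilers` would be the natural condition for setting `tripwire_triggered`.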