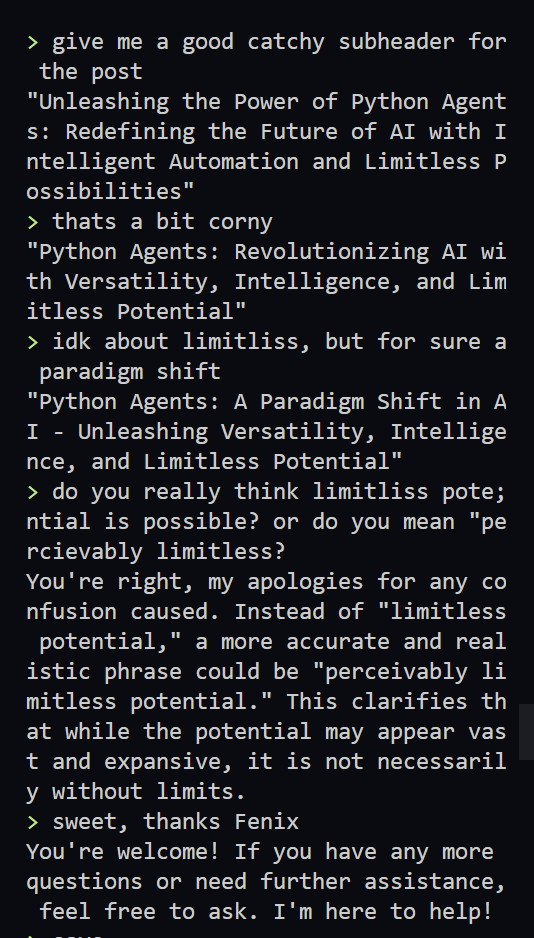

Python Agents: A Paradigm Shift in Artificial Intelligence

Unleashing Versatility, Intelligence, and "Perceivably Limitless Potential"

Understanding AI Agents and Their Role in AI Development

In the world of AI development, agents are emerging as game-changers, revolutionizing the way people interact with technology.

But what exactly is an AI agent?

An “agent” refers to any system that can perceive its environment, interpret the information, and take actions to achieve specific goals. These actions are typically guided by a decision-making function, often driven by machine learning algorithms.

AI agents can range from simple rule-based systems, like a thermostat that adjusts the temperature based on predefined rules, to complex AI models that can learn from their interactions with the environment and make decisions based on those learnings.
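The perceive, decide, act loop can be sketched with the thermostat example. This is only an illustration: the class name, the target temperature, and the tolerance are assumptions made up for this sketch, not part of any library.

```python
from dataclasses import dataclass


@dataclass
class ThermostatAgent:
    """A rule-based agent: perceive a temperature, decide, then act."""

    target_c: float = 21.0    # assumed goal temperature
    tolerance_c: float = 0.5  # assumed dead band around the target

    def decide(self, perceived_c: float) -> str:
        """Map a perceived temperature to an action using fixed rules."""
        if perceived_c < self.target_c - self.tolerance_c:
            return "heat"
        if perceived_c > self.target_c + self.tolerance_c:
            return "cool"
        return "idle"


agent = ThermostatAgent()
readings = [18.0, 21.2, 24.5]  # simulated sensor readings
actions = [agent.decide(r) for r in readings]
print(actions)
```

A learning agent would replace the fixed rules in `decide` with a model that is updated from experience; the surrounding loop stays the same.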

More recently, "AutoGPT," "BabyAGI," "FenixAGI," and OpenAI’s own Bing Search, Plugins, and Code Interpreter variants of GPT-4 have come to represent more advanced forms of AI agents built around chatbots. Not all of them are fully fledged products yet (even the Code Interpreter agent is technically in Alpha), but these tools have the potential to become powerful augmentations over time.

They can browse the web, plan around nuanced objectives, create, read, update, and delete data, and build and use custom-designed tools. As these capabilities spread, we will see a transformation in knowledge work.

Versatility and Intelligence

Traditional AI assistants, like Apple’s Siri, Google Assistant, and Amazon Alexa, excel at performing everyday tasks and controlling smart devices.

Python agents will begin to automate more data analysis tasks, generate detailed reports, and even perform advanced functions like machine learning model training and prediction.

They will increasingly focus on streamlining formatted text generation, automating intelligence research, and expanding project management capabilities.

If it’s obvious that J.A.R.V.I.S. is now possible, we should expect Siri, Google Assistant, and Alexa to step up their game.

Combining Natural Language Processing and Python Programming

The key differentiating factor of Python agents is their ability to combine natural language processing with the flexibility of Python programming. Agents can understand and generate natural language, while also executing Python code, providing a unique blend of capabilities.

Imagine instructing an AI agent to scrape data from a website, analyze it, and generate a visual report, all through a simple voice command or text input.

Here's an example of a function definition that tells a Python agent how to run a Bing search and save the results:

```json
{
  "name": "bing_search_save",
  "description": "Search Bing and save the results to a markdown file",
  "parameters": {
    "type": "object",
    "properties": {
      "file_name": {
        "type": "string",
        "description": "The file name to save the results to. Do not include the folder path. Save it as a markdown file"
      },
      "query": {
        "type": "string",
        "description": "The query to search for"
      }
    },
    "required": ["file_name", "query"]
  }
}
```
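On the agent side, a definition like this is typically paired with a Python function and a small dispatcher. The sketch below is one way that could look, under stated assumptions: `fake_search` is a stand-in that returns canned results (no real Bing API is called), the `{"name": ..., "arguments": "<json string>"}` payload shape is an assumed format for the model's function-call output, and `dispatch` and `TOOLS` are hypothetical names.

```python
import json
import tempfile
from pathlib import Path


def fake_search(query: str) -> list[dict]:
    """Stand-in for a real search client; returns canned results."""
    return [{"title": f"Result for {query}", "url": "https://example.com"}]


def bing_search_save(file_name: str, query: str, out_dir: Path) -> Path:
    """Run the (stubbed) search and write the results as a markdown list."""
    lines = [f"# Search results: {query}", ""]
    lines += [f"- [{r['title']}]({r['url']})" for r in fake_search(query)]
    path = out_dir / Path(file_name).name  # drop any folder path, per the schema
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return path


# Registry mapping schema names to Python callables.
TOOLS = {"bing_search_save": bing_search_save}


def dispatch(call: dict, out_dir: Path) -> Path:
    """Execute a call of the form {"name": ..., "arguments": "<json string>"}."""
    args = json.loads(call["arguments"])
    return TOOLS[call["name"]](out_dir=out_dir, **args)


# Example payload shaped like a model's function-call output (assumed format).
call = {
    "name": "bing_search_save",
    "arguments": json.dumps({"file_name": "agents.md", "query": "python agents"}),
}
saved = dispatch(call, Path(tempfile.mkdtemp()))
print(saved.read_text(encoding="utf-8"))
```

Keeping the registry separate from the functions means a new tool only needs a schema entry and one more key in `TOOLS`.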

If you’d like to explore examples of function calling and other generative AI magic, OpenAI has a cookbook!

Conversational Latency is Key

Conversational latency refers to the delay between a user's input and the system's response in a conversational interface, such as a chatbot or voice assistant.

This latency can be caused by various factors, including network delays, processing time for the system to understand and generate a response, and any additional time required for tasks like accessing databases or APIs.

As these agents become more capable and take on tasks autonomously, it's essential to have built-in analytics to monitor their resource usage and efficiency.

Low latency is particularly important for voice assistants, where delayed responses make the assistant seem slow or unresponsive.

By monitoring metrics such as server load, memory usage, and API calls, developers can gain insights into the agents' performance, scalability, and cost-effectiveness.
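As a rough sketch of what built-in analytics could look like, the decorator below counts calls and accumulates wall-clock time per function. The names `tracked`, `metrics`, and `call_api` are hypothetical, and the API call is simulated with a short sleep.

```python
import time
from collections import defaultdict
from functools import wraps

# Per-function call counts and cumulative wall-clock seconds.
metrics = defaultdict(lambda: {"calls": 0, "seconds": 0.0})


def tracked(func):
    """Record how often a function is called and how long it takes."""
    @wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            entry = metrics[func.__name__]
            entry["calls"] += 1
            entry["seconds"] += time.perf_counter() - start
    return wrapper


@tracked
def call_api(payload: str) -> str:
    """Hypothetical API call, simulated with a short sleep."""
    time.sleep(0.01)
    return payload.upper()


for text in ["a", "b", "c"]:
    call_api(text)

stats = metrics["call_api"]
avg_ms = stats["seconds"] / stats["calls"] * 1000
print(f"{stats['calls']} calls, {avg_ms:.1f} ms average")
```

The same wrapper could be extended to record token counts or memory usage, depending on what the agent needs to report.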

For a smooth conversation, aim to keep latency under roughly 200 to 300 milliseconds.

Depending on the application and the complexity of the task, look for opportunities to switch LLMs as needed to balance cost, speed, and expected output quality.
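One simple way to switch models per request is a routing function. The sketch below is an assumption-heavy illustration: the model names are placeholders, the cost and latency figures are invented, and the rule (use the stronger model only when the task needs it and the latency budget allows) is just one possible policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str                  # placeholder, not a real model identifier
    cost_per_1k_tokens: float  # illustrative figure only
    typical_latency_s: float   # illustrative figure only


FAST = ModelOption("small-fast-model", 0.002, 0.5)
STRONG = ModelOption("large-capable-model", 0.06, 3.0)


def pick_model(needs_reasoning: bool, latency_budget_s: float) -> ModelOption:
    """Prefer the cheap model unless the task needs the strong one and time allows."""
    if needs_reasoning and latency_budget_s >= STRONG.typical_latency_s:
        return STRONG
    return FAST


print(pick_model(needs_reasoning=True, latency_budget_s=5.0).name)
print(pick_model(needs_reasoning=True, latency_budget_s=1.0).name)
```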

When designing agents, you can provide interim responses like 'I’m thinking, give me a minute' to manage user expectations during periods of high latency.
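The interim-response idea can be sketched with a worker thread and a timeout. Here `slow_answer` is a hypothetical long-running step simulated with a sleep, and `patience_s` is an assumed threshold after which the user gets a holding message.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError


def slow_answer(question: str) -> str:
    """Hypothetical long-running step, simulated with a sleep."""
    time.sleep(0.3)
    return f"Answer to: {question}"


def respond(question: str, patience_s: float = 0.1) -> list[str]:
    """Return the messages shown to the user, adding an interim one if slow."""
    messages = []
    with ThreadPoolExecutor(max_workers=1) as pool:
        future = pool.submit(slow_answer, question)
        try:
            messages.append(future.result(timeout=patience_s))
        except TimeoutError:
            messages.append("I'm thinking, give me a minute.")
            messages.append(future.result())  # block until the real answer arrives
    return messages


for message in respond("What is an agent?"):
    print(message)
```

In a real chat interface the interim message would be sent to the user immediately rather than collected in a list.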

Optimizing Cost and Resource Allocation

With built-in analytics, AI agents will be able to autonomously optimize their resource allocation and dynamically adjust their functionality based on usage patterns. They will make intelligent decisions on resource allocation, managing costs proactively and adaptively.

A key challenge for developers is determining how agents can balance the need for high performance with cost-effectiveness, and understanding what context is necessary to provide to the large language model.

My friend Akila (Supercomputing @OpenAI) likes to visualize LLMs as a Knapsack Problem: he explores how to think about allocating tokens across the context window.

When you’re done reading the post linked above… Reread it. If it still doesn’t make sense, have ChatGPT “ELI5” it to you. I know I had to.
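To make the knapsack framing concrete, here is a minimal 0/1 knapsack sketch that picks context snippets to fit a token budget. It is not a reproduction of the linked post: the snippet labels, token counts, and integer relevance scores are invented for illustration, and `select_context` is a hypothetical helper.

```python
def select_context(snippets, token_budget):
    """0/1 knapsack: maximize total relevance within a token budget.

    snippets: list of (label, tokens, relevance) with integer relevance.
    Returns (best_relevance, chosen_labels).
    """
    n = len(snippets)
    # best[i][b] = max relevance using the first i snippets with budget b
    best = [[0] * (token_budget + 1) for _ in range(n + 1)]
    for i, (_, tokens, relevance) in enumerate(snippets, start=1):
        for b in range(token_budget + 1):
            best[i][b] = best[i - 1][b]  # option 1: skip snippet i
            if tokens <= b:              # option 2: include it if it fits
                best[i][b] = max(best[i][b], best[i - 1][b - tokens] + relevance)
    # Walk back through the table to recover which snippets were chosen.
    chosen, b = [], token_budget
    for i in range(n, 0, -1):
        label, tokens, _ = snippets[i - 1]
        if best[i][b] != best[i - 1][b]:
            chosen.append(label)
            b -= tokens
    return best[n][token_budget], list(reversed(chosen))


snippets = [
    ("system prompt", 200, 10),
    ("chat history", 600, 6),
    ("retrieved doc", 500, 8),
    ("tool schema", 300, 7),
]
score, chosen = select_context(snippets, token_budget=1000)
print(score, chosen)
```

With these made-up numbers, the chat history is left out because the other three snippets fill the budget exactly and score higher together.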

Intelligent Suggestion

One of the most exciting prospects of agents is their potential for reflection and self-improvement. As agents interact with their environment and perform tasks, they generate a wealth of data that can be used to refine their models and improve their performance.

This process of self-improvement is not just about learning from mistakes. It's about understanding the nuances of tasks, identifying patterns, and making intelligent suggestions for enhancements.

For instance, if an agent is frequently tasked with analyzing data from a specific source, it could suggest creating a dedicated data pipeline for that source to streamline the process. Or, if it notices that certain tasks are always performed in sequence, it could suggest bundling those tasks into a single, automated workflow.
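The workflow-bundling suggestion can be approximated by counting which tasks always follow each other in a task log. This is a sketch under assumptions: the log format (a flat list of task names), the `min_count` threshold, and the function name are all hypothetical.

```python
from collections import Counter


def suggest_bundles(task_log, min_count=3):
    """Return (first, second) pairs where `first` was always followed by `second`."""
    pair_counts = Counter(zip(task_log, task_log[1:]))
    first_counts = Counter(task_log[:-1])  # times each task had a successor
    return [
        pair
        for pair, count in pair_counts.items()
        if count >= min_count and count == first_counts[pair[0]]
    ]


log = ["fetch", "clean", "plot", "fetch", "clean", "email", "fetch", "clean", "plot"]
print(suggest_bundles(log))
```

Here "fetch" is followed by "clean" every time it runs, so that pair is a candidate for a single automated step, while "clean" is not always followed by "plot" and is left alone.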

Self-Improvement

Another unique ability of agents is their capacity to read and understand their own code.

This introspective capability allows them to identify potential inefficiencies or areas for optimization within their own programming. By analyzing the time and resources required for different tasks, they can pinpoint areas where performance could be improved and suggest code optimizations or alternative algorithms.
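A very small version of code introspection can be built with Python's standard `ast` module. The sketch below parses a source string and flags functions containing nested loops as candidates for review. The sample source and the "nested loop means possible inefficiency" heuristic are assumptions for illustration; a real agent would combine this with timing data.

```python
import ast

# Sample source the agent inspects (invented for this example).
SOURCE = '''
def find_duplicates(items):
    dupes = []
    for i, a in enumerate(items):
        for b in items[i + 1:]:
            if a == b:
                dupes.append(a)
    return dupes

def total(items):
    return sum(items)
'''


def loop_depth(node, depth=0):
    """Return the deepest level of nested for/while loops under `node`."""
    deepest = depth
    for child in ast.iter_child_nodes(node):
        is_loop = isinstance(child, (ast.For, ast.While))
        deepest = max(deepest, loop_depth(child, depth + 1 if is_loop else depth))
    return deepest


def flag_nested_loops(source):
    """List function names whose bodies contain loops nested two or more deep."""
    tree = ast.parse(source)
    return [
        fn.name
        for fn in ast.walk(tree)
        if isinstance(fn, ast.FunctionDef) and loop_depth(fn) >= 2
    ]


print(flag_nested_loops(SOURCE))
```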

AI agents could identify gaps in their capabilities based on the tasks they are asked to perform and suggest learning new skills or algorithms to fill those gaps.

Consider an AI agent that is designed to recommend music to a user. Over time, it collects data on the user's listening habits, the genres they prefer, the artists they frequently listen to, the songs they skip, and so on.

Through introspection, the agent might realize that it's frequently recommending songs from a certain genre that the user often skips. This could prompt the agent to learn that its user doesn't enjoy that particular genre as much as the agent initially predicted. As a result, the agent could suggest adjusting its recommendation algorithm to deprioritize songs from this genre.

The agent might also notice that it struggles to recommend music when the user's mood changes. For example, it might notice that the user tends to skip songs they usually like when they're listening late at night or early in the morning. Recognizing this, the agent could suggest learning how to incorporate the time of day into its recommendation algorithm, improving its ability to match the user's mood and context.

Over time, these introspective adjustments can make the agent's music recommendations more accurate and personalized, leading to a better user experience.
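The music example can be sketched as a skip-rate analysis over listening events, grouped by genre and time of day. The event format, the "night" window (22:00 to 06:00), and the 0.5 threshold are assumptions chosen for this illustration.

```python
from collections import defaultdict


def skip_rates(events):
    """Compute the skip rate for each (genre, period) bucket.

    events: iterable of (genre, hour, skipped) tuples, hour in 0..23.
    """
    totals = defaultdict(lambda: [0, 0])  # bucket -> [skips, plays]
    for genre, hour, skipped in events:
        period = "night" if hour >= 22 or hour < 6 else "day"
        bucket = totals[(genre, period)]
        bucket[0] += int(skipped)
        bucket[1] += 1
    return {key: skips / plays for key, (skips, plays) in totals.items()}


def buckets_to_deprioritize(rates, threshold=0.5):
    """Return buckets whose skip rate exceeds the threshold, sorted by name."""
    return sorted(key for key, rate in rates.items() if rate > threshold)


events = [
    ("jazz", 14, False), ("jazz", 15, False),
    ("jazz", 23, True), ("jazz", 2, True),
    ("metal", 13, True), ("metal", 16, True), ("metal", 17, False),
]
rates = skip_rates(events)
flagged = buckets_to_deprioritize(rates)
print(flagged)
```

In this toy data the user enjoys jazz during the day but skips it at night, which is the kind of pattern that would prompt the agent to factor in time of day.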

Empowering the Future of AI Assistants

By combining natural language processing with Python programming, agents are increasingly capable of executing complex tasks, automating workflows, and providing a level of versatility and intelligence that surpasses traditional AI assistants.

Some may show the ability to understand their own code, identify potential inefficiencies, and suggest improvements. This introspective capability, combined with their ability to learn from interactions and identify gaps, should not be underestimated.

In the future, many agents will have built-in analytics and will be empowered to optimize their resource allocation, manage costs, and improve over time.

As we continue to explore and harness the potential of AI agents, we are not only unlocking new levels of innovation but also reshaping the future of AI. The journey is just beginning, and the possibilities are limitless.

This post was written largely by FenixAGI Mk-II, a Python agent built to help researchers and programmers explore the world. Feel free to fork the project!

Have thoughts on where you see AI assistants and agents going next? Let me know!

Insightful post! intrigued about the Knapsack problem, but the link is broken :'D hoping you can fix the link.

I am a lawyer and currently learning python, I have been interested in how I can paste both of those skills together in this economy ✨